In March 2024, the Italian Data Protection Authority (“Italian DPA”) issued a new digest (“Digest”) on the processing of personal data, whether or not it qualifies as health data under Article 9 of the GDPR, carried out through platforms, accessible via apps or web pages (“Platforms”), that aim to connect healthcare professionals (“HCPs”) with patients.

The use of such Platforms poses high risks to the protection and security of patients’ personal data, in particular health-related data, which are subject to the enhanced protection regime set out in Article 9 of the GDPR.

The Digest summarizes the applicable data protection rules and defines the roles of the parties, as well as the legal bases, applicable to (i) the processing of users’ personal data by the Platform’s owner; (ii) the processing of HCPs’ personal data by the Platform’s owner; and (iii) the processing of patients’ health data by the Platform’s owner and by the HCPs.

Additional guidance is provided as to:

- The need for the Platform’s owner to carry out (and periodically update) a data protection impact assessment (DPIA) pursuant to Article 35 GDPR, since the use of Platforms entails “high risk” processing of personal data: this kind of processing automatically meets the criteria issued by the European Data Protection Board for identifying the processing operations that are subject to the duty to perform a DPIA;

- Which information notices should be provided, by whom and to whom, as well as the contents that such notices should have in each case, pursuant to Articles 13 and 14 GDPR;

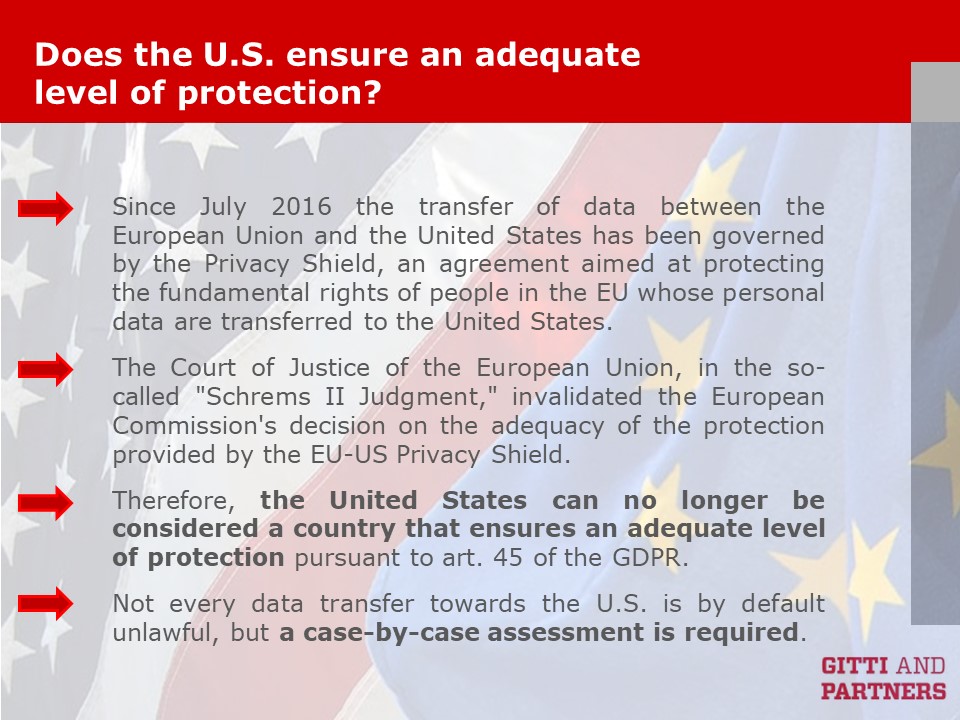

- The specific rules applicable to cross-border data transfers and data transfers to third countries.

Lastly, the Digest lists the technical and organizational measures most commonly adopted by data controllers to meet the GDPR requirements, such as encryption; verification of the qualifications of HCPs seeking to enroll on the Platform; strengthened authentication systems; and monitoring systems aimed at preventing unauthorized access or loss of data.

Platform owners should welcome the Digest, as it provides a reliable and comprehensive legal framework that they can follow to set up a Platform in compliance with the GDPR principles.
